Sunday, January 29, 2006

The fourth and fifth options

Looking at the options from January 25th and 26th, I am now considering a few more options for combining Computer Graphics and Computer Vision in a thesis project:

- Using a camera to film the background while the camera moves around. With a moving background, inserted CG objects would have to track features in the background to look like they belong in the scene, showing the same motion, relative size and rotation no matter how the camera moves. To make this a complete project, some more challenge would need to be added, which I'll try to think of in the next few days, before our starting meeting on Thursday, February 2nd.

- The fourth option could also be "reversed", with a background made in CG and moving objects, such as humans walking around, inserted into the CG scene. This is too much like the old People Tracker project we made earlier, though, so it probably wouldn't be interesting to build.

Thursday, January 26, 2006

The next option

One option I recently thought of for the thesis application is a tool for making movies, or rather, for making "monster movements" in movies.

- The idea would be to create a person tracker, which we've done before, that also tracks the movement of body parts such as the arms, or maybe even individual fingers. This would of course be a more difficult project than most of the other options, considering what the software would have to take into account to track a person's body parts. Not only can the parts suddenly become hidden, like when a person turns around and one of the body parts ends up behind him or her as seen from the camera, but the software would also have to handle things such as changing shadows and clothes with colors similar to the background. Of course, one option would be to have a single-colored screen behind the person, which can be replaced by the movie scene. But what if you're on a low budget and don't have an actual studio? This could instead be a tool for the everyday person: film a home movie and then alter the look of any person on the screen, after which the new look follows the position and rotation of the person who was replaced.
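The single-colored screen option is what is usually called chroma keying. A minimal sketch, assuming images stored as plain lists of rows of `(r, g, b)` tuples and a hypothetical `chroma_key` helper that uses a simple distance threshold in RGB space (real keyers usually work in a color space such as YCbCr and soften the edges instead of making a hard cut):

```python
def chroma_key(foreground, background, key=(0, 255, 0), tolerance=60):
    """Replace foreground pixels close to the key color with background pixels.

    Both images are lists of rows of (r, g, b) tuples and must have the same size.
    """
    if len(foreground) != len(background):
        raise ValueError("images must have the same height")
    tol_sq = tolerance * tolerance
    result = []
    for fg_row, bg_row in zip(foreground, background):
        if len(fg_row) != len(bg_row):
            raise ValueError("images must have the same width")
        out_row = []
        for fg, bg in zip(fg_row, bg_row):
            # Squared Euclidean distance in RGB space to the key color.
            dist_sq = sum((c - k) ** 2 for c, k in zip(fg, key))
            out_row.append(bg if dist_sq <= tol_sq else fg)
        result.append(out_row)
    return result
```

A hard threshold like this leaves jagged edges and fails exactly where the text above predicts, on clothes with colors close to the screen color.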

Wednesday, January 25, 2006

End of project - long live the (next) project

After the exam for the project I mentioned in the previous post, I noticed an important improvement I had missed when considering future work:

If we insert an object into a background, or simply onto some kind of ground plane seen in a perspective view, we of course need to know the normal of this plane to find out "which way is up". This part is fairly simple: the normal can be found either from the image of the absolute conic together with the horizon (often referred to as the vanishing line), or by finding the image points where projected parallel lines intersect (the vanishing points) for sets of parallel lines in several directions. This is something we thought of doing, with varying success in aligning the object and image normals. What we didn't consider in the project was that there's not only an "up direction" for an object; there's of course also the direction in which the object faces. To be able to rotate the object together with the plane it stands on, we can use a marker on the plane, such as an arrow, to find out how to rotate the object that has been inserted on top.
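The vanishing-line route to "which way is up" can be sketched as follows. This is a minimal sketch, not the project's code: it assumes a pinhole camera with zero skew, square pixels, focal length `f` and principal point `(cx, cy)`, so the calibration matrix K is known (that is the information the image of the absolute conic, ω = (K K^T)^-1, carries). The helper names are hypothetical. Two vanishing points give the horizon l, and the plane normal in camera coordinates is then n ∝ K^T l, as derived in Hartley and Zisserman:

```python
import math


def cross(a, b):
    """Cross product of two 3-vectors (homogeneous image points or lines)."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def line_through(p, q):
    """Homogeneous image line through two pixel points (x, y)."""
    return cross((p[0], p[1], 1.0), (q[0], q[1], 1.0))


def vanishing_point(seg_a, seg_b):
    """Intersection of the images of two parallel world lines (homogeneous)."""
    return cross(line_through(*seg_a), line_through(*seg_b))


def plane_normal(f, cx, cy, horizon):
    """Unit plane normal in camera coordinates, n = K^T l, for a zero-skew K."""
    l0, l1, l2 = horizon
    # K^T = [[f, 0, 0], [0, f, 0], [cx, cy, 1]]
    n = (f * l0, f * l1, cx * l0 + cy * l1 + l2)
    norm = math.sqrt(sum(c * c for c in n))
    return tuple(c / norm for c in n)


# Example: one pair of horizontal segments and one pair receding to (320, 240).
v_side = vanishing_point(((0, 300), (100, 300)), ((0, 400), (100, 400)))
v_depth = vanishing_point(((100, 400), (210, 320)), ((500, 400), (410, 320)))
horizon = cross(v_side, v_depth)           # the image line y = 240
normal = plane_normal(800, 320, 240, horizon)
```

Here the normal comes out as (0, -1, 0), which points up in camera coordinates where the y axis points down. The sign of n is not determined by the horizon alone and has to be fixed separately, for example by requiring the normal to point towards the camera.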

Of course, more (and more thorough) explanations of this can be found in the literature we have used most frequently:

- Introductory Techniques for 3-D Computer Vision (Trucco, Verri)

- Multiple View Geometry in Computer Vision (Hartley, Zisserman)

The direction of the inserted object becomes especially interesting if we have, for example, a live video feed, where any object in the scene (the background) can be rotated at any given moment. This is another possible approach to the thesis project. At this point we are considering the following thesis projects:

- A board game played by people in different places, using simple bricks with letters, or other clear markers, as pieces. The game would be played using a camera that sends a live video feed from one player to the other. The feed would be augmented with CG models replacing the bricks on the board, to make it look more interesting and fun. For example, one player could put up a brick showing an 'M', which the software interprets as a monster brick, and display an animated monster on the screen that moves and rotates the same way the board does relative to the camera. The other player (or perhaps the computer AI) could then find a reason to put up the 'W' brick, which suddenly grows a wall on the screen in the position of the brick...

- The other approach is basically what I've outlined as future improvements of the previous project. This includes image rectification and putting the rectified images as textures on a 3D model derived from information in photos. Interaction in this version would be to combine several images into, for example, one big ground area, ending at a castle in one direction and at the courtyard entrance in another. There would of course be a few challenges, which I'll describe in more detail if I decide on this approach. Either way, in the end this approach would give a virtual world in which people could "walk around", talk (either by text or microphone) and perhaps even find out curious facts about the surroundings. This could of course have commercial value for museums, city planners, architects and so on; there's no end to the possibilities!
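Whichever project is chosen, an inserted model needs a facing direction as well as an up direction. A minimal sketch of the arrow-marker idea from earlier in this post, assuming the same kind of zero-skew camera (`f`, `cx`, `cy`), a known unit plane normal `n` pointing from the plane towards the camera (for example from the horizon, as described above), and hypothetical helper names: both ends of the arrow are back-projected onto the plane, and the arrow's angle is measured within the plane.

```python
import math


def _dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def _cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def backproject_to_plane(px, f, cx, cy, n, d=-1.0):
    """Intersect the viewing ray through pixel px with the plane n . X = d.

    With n pointing from the plane towards the camera, d = -1 puts the plane
    one unit from the camera; the true distance only rescales the result.
    Pixels on the horizon give a ray parallel to the plane (division by zero).
    """
    ray = ((px[0] - cx) / f, (px[1] - cy) / f, 1.0)
    s = d / _dot(n, ray)
    return tuple(s * c for c in ray)


def arrow_heading(tail_px, head_px, f, cx, cy, n):
    """Signed angle in degrees (about n) of an arrow drawn on the plane,
    measured from the camera's viewing direction projected onto the plane."""
    tail = backproject_to_plane(tail_px, f, cx, cy, n)
    head = backproject_to_plane(head_px, f, cx, cy, n)
    direction = tuple(h - t for h, t in zip(head, tail))
    # Reference axis: the optical axis (0, 0, 1) projected onto the plane.
    # Undefined if the camera looks straight down along the normal.
    k = n[2]
    e1 = (-k * n[0], -k * n[1], 1.0 - k * n[2])
    length = math.sqrt(_dot(e1, e1))
    e1 = tuple(c / length for c in e1)
    e2 = _cross(n, e1)  # (e1, e2, n) form a right-handed basis
    return math.degrees(math.atan2(_dot(direction, e2), _dot(direction, e1)))
```

For a live video feed, the model's rotation about the normal would simply be updated with this angle in every frame.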

Monday, January 23, 2006

Improvements of old project

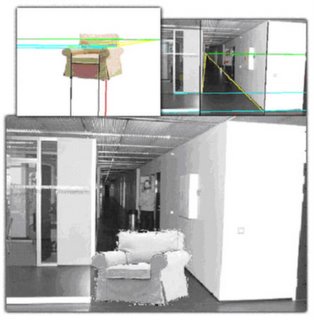

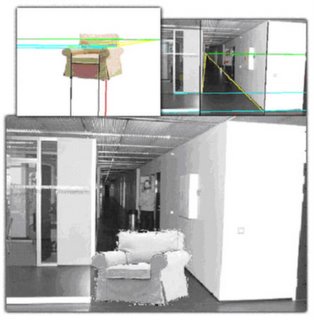

In the autumn, we created an algorithm for inserting objects (such as a chair) into a background image (for example a room). This was done by finding the horizon, calculating a vanishing point (a point in an image where the images of real-world parallel lines converge) from it, and then aligning the horizon and vanishing point of the object and background by rotating and skewing the object. The result of one test can be seen in the images in this post.
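The rotation part of that alignment can be sketched as below. This is a minimal sketch with hypothetical names, not the algorithm we used: it only makes the object's horizon parallel to the background's by rotating the object's points about a chosen pivot, and it leaves out the skewing step that moves the vanishing point. Each horizon is given as two image points listed left to right, so that the two angles are comparable:

```python
import math


def horizon_angle(p, q):
    """Angle in radians of the image line through points p and q."""
    return math.atan2(q[1] - p[1], q[0] - p[0])


def align_to_horizon(points, obj_horizon, bg_horizon, pivot):
    """Rotate object points about pivot so the object's horizon becomes
    parallel to the background's horizon."""
    angle = horizon_angle(*bg_horizon) - horizon_angle(*obj_horizon)
    c, s = math.cos(angle), math.sin(angle)
    px, py = pivot
    rotated = []
    for x, y in points:
        dx, dy = x - px, y - py
        rotated.append((px + c * dx - s * dy, py + s * dx + c * dy))
    return rotated
```

In practice the same rotation would be applied to the object image itself, for example as part of one combined affine or projective warp together with the skew.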

One possible thesis approach is to improve the results of this project, for example in the following ways:

Improve object angles

- Edge detection of multiple parallel lines, followed by a maximum likelihood estimate of the vanishing point.

- Image rectification of both object and background, thereby making all world parallel lines parallel in the images as well.

- Blur object edges.

- Better edge detection.

- Analyze and align the illumination parameters (such as color and direction) of object and background.

- Cast shadows from the inserted object onto background objects.
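For the first improvement in the list, a minimal sketch of combining many detected edges into one vanishing point. Note that this is a simpler linear least-squares estimate, not the maximum likelihood estimate mentioned in the list: it picks the point that minimizes the sum of squared perpendicular distances to all the lines, whereas the ML estimate described by Hartley and Zisserman fits lines constrained to pass through the vanishing point to the measured segment endpoints and needs an iterative solver. A linear estimate like this one is a common starting point for that iteration. The segment format and helper names are hypothetical:

```python
import math


def line_from_segment(p, q):
    """Normalized line (a, b, c) with a*x + b*y + c = 0 and a^2 + b^2 = 1."""
    a, b = q[1] - p[1], p[0] - q[0]
    norm = math.hypot(a, b)
    a, b = a / norm, b / norm
    return a, b, -(a * p[0] + b * p[1])


def vanishing_point_lsq(segments):
    """Point minimizing the summed squared perpendicular distance to all lines."""
    saa = sab = sbb = sac = sbc = 0.0
    for p, q in segments:
        a, b, c = line_from_segment(p, q)
        saa += a * a
        sab += a * b
        sbb += b * b
        sac += a * c
        sbc += b * c
    # Normal equations: [saa sab; sab sbb] [x; y] = -[sac; sbc]
    det = saa * sbb - sab * sab
    if abs(det) < 1e-12:
        raise ValueError("lines are (nearly) parallel in the image")
    x = (-sac * sbb + sbc * sab) / det
    y = (-sbc * saa + sac * sab) / det
    return x, y
```

Lines that are parallel in the image (a vanishing point at infinity) make the system singular; handling those needs the homogeneous formulation instead.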

Sunday, January 22, 2006

Thesis thoughts

I've started considering which subjects could be interesting for my thesis. The projects and courses in my M.Sc. in IT have mainly focused on Computer Graphics (three courses, both theoretical and practical, with programming and the WYSIWYG tool 3ds Max) and Computer Vision (three projects).

I've ordered a couple of books (C# Complete and AJAX in Action - the AJAX book will probably not be part of the thesis, but I'll use it for work, and maybe for a later version of the tool I create in the thesis).

On the side, I'm currently working on some projects involving Danish municipalities that check the quality of their healthcare. This will be done using a questionnaire, for which I finished building the admin interface yesterday.

For another project, I have my own test server up and running, using Apache, PHP and MySQL, with phpMyAdmin as the admin interface. This is where I'll test AJAX and see how interesting it actually is.
